Why use o3-pro? Unlike general-purpose models like GPT-4o, which prioritize speed, broad knowledge, and making users feel good about themselves, o3-pro uses a chain-of-thought simulated reasoning process. It devotes more output tokens to working through complex problems, which generally makes it better for technical challenges that require deeper analysis.

It's still not perfect.

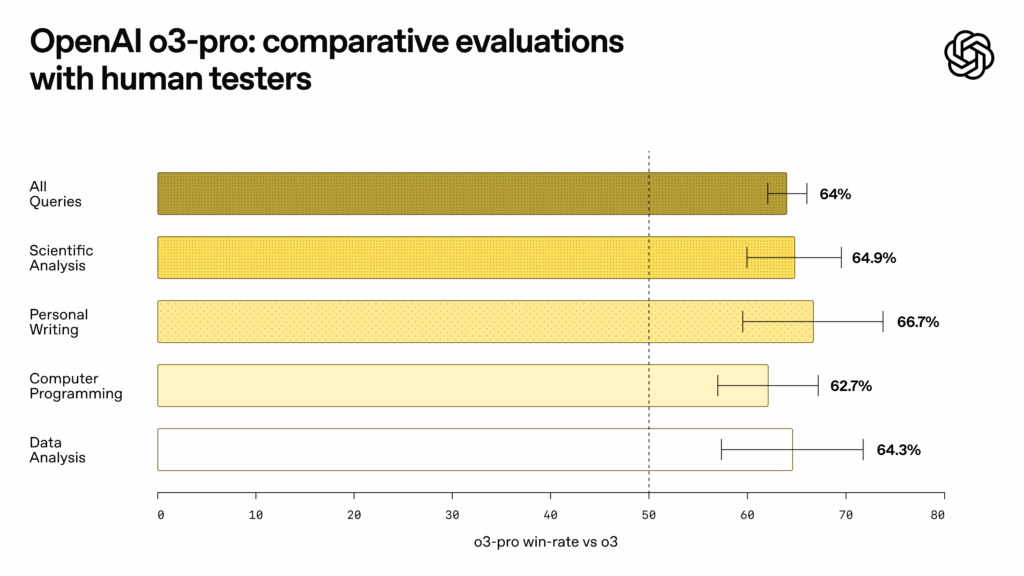

An OpenAI o3-pro benchmark chart.

Credit: OpenAI

Measuring so-called "reasoning" capability is tricky because benchmarks are easy to game through cherry-picking or training-data contamination. Still, OpenAI reports that o3-pro is at least popular among testers.

"In expert evaluations, reviewers consistently prefer o3-pro over o3 in every tested category and especially in key domains like science, education, programming, business, and writing help," writes OpenAI in its release notes.

Reviewers also rated o3-pro consistently higher for clarity, comprehensiveness, instruction-following, and accuracy.

An OpenAI o3-pro benchmark chart.

Credit: OpenAI

OpenAI shared benchmark results showing o3-pro's reported performance improvements.

On the AIME 2024 mathematics competition, o3-pro achieved 93 percent pass@1 accuracy, compared to 90 percent for o3 (medium) and 86 percent for o1-pro.
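"pass@1" is commonly read as the probability that a single sampled answer is correct. OpenAI's notes here don't say how many samples it drew per problem, so the sketch below is only an illustration under assumptions: it uses a widely used unbiased pass@k estimator (for k = 1 it reduces to the fraction of correct samples per problem), averaged across problems. The per-problem results are hypothetical, not OpenAI's data.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        # Every possible set of k samples contains at least one correct answer.
        return 1.0
    # 1 minus the probability that k samples chosen from the n contain no correct answer.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average the per-problem estimates over a whole benchmark."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical (samples drawn, samples correct) for four problems.
hypothetical = [(4, 4), (4, 3), (4, 4), (4, 2)]
print(mean_pass_at_k(hypothetical, k=1))
```

Averaging over several samples per problem is one reason a score like 93 percent can appear on a 30-question exam, where a single attempt per question could only produce multiples of about 3.3 percent.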

The model reached 84 percent on PhD-level science questions from GPQA Diamond, up from 81 percent for o3 (medium) and 79 percent for o1-pro.

For programming tasks measured by Codeforces, o3-pro attained an Elo rating of 2748, surpassing o3 (medium) at 2517 and o1-pro at 1707.
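To put those rating gaps in perspective, the sketch below applies the standard Elo expected-score formula, 1 / (1 + 10^((R_b - R_a) / 400)). This assumes the reported numbers behave like ordinary Elo ratings. Codeforces' rating system is Elo-like, but these figures are OpenAI's estimates and do not come from head-to-head matches between the models.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo logistic model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Ratings as reported by OpenAI for the Codeforces benchmark.
ratings = {"o3-pro": 2748, "o3 (medium)": 2517, "o1-pro": 1707}

for rival in ("o3 (medium)", "o1-pro"):
    p = elo_expected_score(ratings["o3-pro"], ratings[rival])
    print(f"o3-pro vs {rival}: expected score {p:.3f}")
```

Under that assumption, the 231-point gap over o3 (medium) works out to an expected score of roughly 0.79. The 1,041-point gap over o1-pro pushes it above 0.99.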

When reasoning is simulated

It's easy for laypeople to be thrown off by the anthropomorphic claims of "reasoning" in AI models.

In this case, as with the borrowed anthropomorphic term "hallucinations," "reasoning" has become a term of art in the AI industry that basically means devoting more compute time to solving a problem.

It does not necessarily mean the AI models systematically apply logic or can construct solutions to truly novel problems.

This is why Ars Technica continues to use the term "simulated reasoning" (SR) to describe these models.

They are simulating a human-style reasoning process that does not necessarily produce the same results as human reasoning when faced with novel challenges.
